It’s no longer science fiction. Artificial Intelligence (AI) has stormed the digital battleground, and savvy cybercriminals are unleashing attacks with the speed and cunning of a machine that never needs coffee breaks. Businesses now face an unprecedented wave of AI-powered cyber threats—which change shape midstream, vanish from traditional radars, and target your most vital assets with alarming precision.

Yet here lies both the challenge and the opportunity. AI isn’t just your nemesis; it’s also your best ally in the fight for business security. Just as attackers leverage AI to create more devastating threats, defenders can harness AI-driven solutions to automate detection, counteract persistent intrusions, and build digital defenses that would make even the most seasoned hacker think twice.

This definitive guide arms business leaders, cybersecurity professionals, and IT decision makers with the knowledge and tools needed to stay ahead. We’ll break down the evolving landscape of AI-enabled cybercrime, spotlight strategic AI-powered prevention and detection technologies, walk you through building an airtight cybersecurity preparedness plan, and tackle the knotty issues of complexity, ethics, and ever-shifting compliance regulations. Ready to future-proof your organization? Let’s get started!

Table of Contents

- Understanding AI-Enabled Cyber Threats: Types, Actors, and Attack Vectors

- Technical, Ethical, and Regulatory Challenges in AI Cybersecurity Adoption

- Strategic AI-Powered Cybersecurity Solutions and Tools for Prevention and Detection

- Developing Comprehensive Business Security and Cybersecurity Preparedness Plans Integrating AI

- Managing Increasing Complexity and Human Factors in Cybersecurity Amid AI Advancements

- Conclusion

- References

Understanding AI-Enabled Cyber Threats: Types, Actors, and Attack Vectors

AI-enabled cyber threats are rewriting the ground rules of digital security. Automated, adaptive, and alarmingly persistent, these threats can outmaneuver legacy defenses and must be met with equally innovative countermeasures. Here, we demystify the landscape—breaking down what makes AI-powered attacks unique, who the masterminds are, and how these digital assaults keep business leaders on their toes.

What Are AI-Enabled Cyber Attacks and Their Unique Features?

AI-enabled cyber attacks represent a paradigm shift. Instead of static lines of malicious code, today’s adversaries unleash “smart” attacks that constantly learn and refine their tactics. So, what does this really mean for your business?

First, automation is their weapon. AI engines can churn out dazzlingly convincing phishing emails, design mutating (yes, mutating) malware that continually shifts signatures, and even generate deepfakes for fraud attempts, all at machine speed and scale. CrowdStrike threat intelligence reports have documented AI-powered ransomware strains that adapt their evasion techniques on the fly, making them far more elusive than their garden-variety ancestors [1]. PurpleSec’s artificial intelligence experts warn that these capabilities enable attackers to “create sophisticated phishing emails, mutating malware, and deepfakes, making traditional defenses insufficient without AI-driven responses” [2].

Phishing, once marked by clumsy grammar and suspicious links, now looks like an email from a trusted colleague—complete with personalized details culled by AI from social media and previous correspondence. Not only do these tools escalate the scale of attacks, but they also hyper-personalize them, dramatically increasing their success rates.

Malware and ransomware leverage AI to analyze their environment and reprogram themselves if they sense defensive tools closing in. This “shape-shifting” makes detection akin to fighting a digital Hydra: chop off one head, and two new variants pop up.

Advanced Persistent Threats (APTs) in the AI Era

Then there are Advanced Persistent Threats (APTs)—the James Bonds of cyberattacks. These are not your run-of-the-mill “smash and grab” jobs. Instead, APTs pursue long-haul infiltration, stealthily burrowing into networks to exfiltrate data, conduct espionage, or sabotage operations. The bad news? AI now powers their toolkits, supercharging their ability to remain undetected.

Research from CrowdStrike and Fortinet describes how modern APT groups, like APT28 and APT34, deploy AI-driven reconnaissance bots to map a target’s network, automate privilege escalation, and even select which data to steal or corrupt based on real-time analysis [1, 3]. Technical breakdowns from TechTarget explain that an APT typically unfolds in phases: initial access (often via AI-powered spear phishing), internal lateral movement using AI-guided credential harvesting, persistent data collection with stealthy techniques, and finally, data exfiltration or system sabotage [3].

The challenge is detection. AI enhancement means APTs can “live off the land,” using legitimate tools and user behaviors to cloak their actions. Telltale signs might include irregular system processes, abnormal account activity, or data transfers that deviate only slightly from the norm: subtle, and almost invisible to the untrained eye or to traditional security alerts.
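One way to picture how a slightly unusual data transfer gets surfaced is a baseline-and-deviation check. The sketch below is illustrative only: it assumes you already collect daily outbound volumes per account, and the sample numbers, the function names, and the 3.5 threshold are assumptions, not taken from any vendor's product. It uses the median and the median absolute deviation (MAD) so that a single large spike does not distort the baseline it is measured against.

```python
from statistics import median


def robust_z_scores(values):
    """Return modified z-scores based on the median and the MAD."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # All values sit on the median, so nothing stands out.
        return [0.0 for _ in values]
    # 0.6745 rescales the MAD so scores are comparable to standard z-scores.
    return [0.6745 * (v - med) / mad for v in values]


def flag_anomalies(daily_mb, threshold=3.5):
    """Return the indices of days that deviate strongly from the baseline."""
    scores = robust_z_scores(daily_mb)
    return [i for i, score in enumerate(scores) if abs(score) > threshold]


# Hypothetical outbound transfer volumes (MB per day) for one service account.
daily_mb = [120, 118, 125, 122, 119, 121, 124, 310, 123, 120]
print(flag_anomalies(daily_mb))
```

A real APT will rarely be this obvious. In practice a check like this would be one signal among many (process activity, login times, destinations), and the threshold would need tuning against your own traffic.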

For more technical insight on how APTs operate and how to recognize them, consult TechTarget’s APT definitions and detection resources.

Who Are the Main Actors Behind AI-Driven Cyber Threats?

The digital villains don’t all wear the same mask. AI-powered cyber threats arise from a diverse cast of characters, including…

State-sponsored actors: Geopolitical motives such as espionage, large-scale data theft, or even operational sabotage drive these groups. Their budgets are deep, and their toolkits increasingly rely on AI for automated reconnaissance and exploitation. APT28 (Russia) and APT34 (Iran) are notorious examples, and 87% of organizations have reported breaches linked to such persistent actors in the last year [3].

Cybercrime syndicates: Crime rings now use AI to automate lucrative campaigns, including AI-generated ransomware, spear phishing, and credential stuffing at scale. They often rent or sell “AI-as-a-service” tools to lower-tier criminals.

Hacktivists: Motivated by ideology rather than money, hacktivists use AI to amplify their disruptions. While less resourced, AI’s scalability lets them punch above their weight.

Insiders: Not all dangers come from outside. Internal staff, whether malicious or negligent, can use AI to exfiltrate data or bypass controls with unprecedented cunning.

The World Economic Forum notes that this expanding spectrum of adversaries drives a surge in both the sophistication and volume of global cyber threats, making a strong argument for businesses to adopt equally smart defenses [4]. Real-world threat actor campaigns using AI, such as the targeted deepfake scams documented by CrowdStrike and PurpleSec, vividly underscore the stakes [1, 2].

For a strategic view of today’s cybersecurity threat landscape, the World Economic Forum Centre for Cybersecurity is well worth reviewing.

Technical, Ethical, and Regulatory Challenges in AI Cybersecurity Adoption

If defending your business sounds like it requires both a computer scientist and a philosopher, you’re not wrong. The integration of AI into cybersecurity is full of promise, but organizations face a minefield of technical snags, ethical dilemmas, and regulatory curveballs.

Technical Challenges: Bias, False Positives, and Detection Accuracy

Not all AI magic is reliable. AI cybersecurity tools are only as good as the data they’re trained on: if that data is biased, incomplete, or too narrow, even the most advanced system can misfire. According to analysis from Palo Alto Networks, AI-powered detection engines often struggle with bias inherited from historic security event logs. A model trained on such logs can also overfit, matching past events so closely that it flags harmless anomalies as threats or, worse, misses entirely new attack patterns. PurpleSec points out that AI-based security systems are particularly vulnerable to high rates of false positives, which overwhelm security teams with non-threat alerts and lead to “alert fatigue” and the real danger of missing a genuine attack amidst the noise [2, 5].
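A little arithmetic shows why false positives swamp teams even when a detector looks accurate on paper. The sketch below uses made-up numbers (100 malicious events hidden among 999,900 benign ones, a 99% detection rate, and a 1% false positive rate); the function name and all inputs are assumptions chosen for illustration.

```python
def alert_precision(attack_events, benign_events, detection_rate, false_positive_rate):
    """Estimate how many raised alerts are real, given event counts and detector rates."""
    true_alerts = attack_events * detection_rate
    false_alerts = benign_events * false_positive_rate
    total_alerts = true_alerts + false_alerts
    # Precision: the share of all alerts that point at a genuine attack.
    precision = true_alerts / total_alerts if total_alerts else 0.0
    return true_alerts, false_alerts, precision


# Hypothetical day of telemetry: attacks are rare compared with normal activity.
true_alerts, false_alerts, precision = alert_precision(
    attack_events=100,
    benign_events=999_900,
    detection_rate=0.99,
    false_positive_rate=0.01,
)
print(f"real alerts: {true_alerts:.0f}")
print(f"false alerts: {false_alerts:.0f}")
print(f"share of alerts that are real: {precision:.2%}")
```

Under these assumptions, roughly one alert in a hundred is genuine. Because attacks are so rare relative to normal activity, even a small false positive rate produces far more noise than signal, which is the root of alert fatigue.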

Practitioners developing solutions for SentinelOne and similar vendors have made strides with improved machine learning algorithms and hybrid AI+human review systems. These approaches help filter out noise and spotlight the true anomalies, but no system is foolproof. AI still needs a chaperone—preferably one who drinks their coffee black and doesn’t mind reviewing logs at 2 a.m.

Ethical Concerns: Transparency, Accountability, and Dual-Use Risks

With great power comes great… liability? The very same AI that detects ransomware could, in the wrong hands, become a supervillain. That’s the dual-use dilemma. There’s also a transparency gap: when an AI security system makes a decision, such as flagging an employee as suspicious, blocking a payment, or escalating an alert, how do you audit the black box?

PurpleSec’s ethical guidelines, spearheaded by Tom Vazdar, emphasize building AI solutions that prioritize explainability and traceability, ensuring that human operators can understand and challenge automated decisions [2]. CyberSaint’s interpretation of the NIST AI Risk Management Framework underscores the need for clear accountability structures, so businesses know who’s on the hook when the digital chips are down [6]. Privacy, trust, and human oversight have never been more paramount.

Navigating Regulatory and Compliance Barriers

The regulatory landscape is catching up, sometimes reluctantly, with the AI revolution. Frameworks both new and established, like the NIST AI Risk Management Framework (AI RMF), ISO 27001/27002, and regional government mandates, are driving heightened scrutiny over AI systems used in cybersecurity.

CyberSaint and NIST provide detailed guidance for managing AI-specific risk and ensuring compliance. Their consensus: Document everything, audit regularly, and harmonize AI cybersecurity policies with the latest industry frameworks [6, 7]. Practical steps often include conducting regular risk assessments, maintaining comprehensive incident response plans, and aligning documentation with standards.

For robust, actionable frameworks, consult the NIST AI Risk Management Framework or CyberSaint’s NIST AI RMF Playbook. Businesses should also stay up to date with CISA’s Best Practices Guide for Securing AI Data, especially critical as more sensitive business data fuels AI operations.

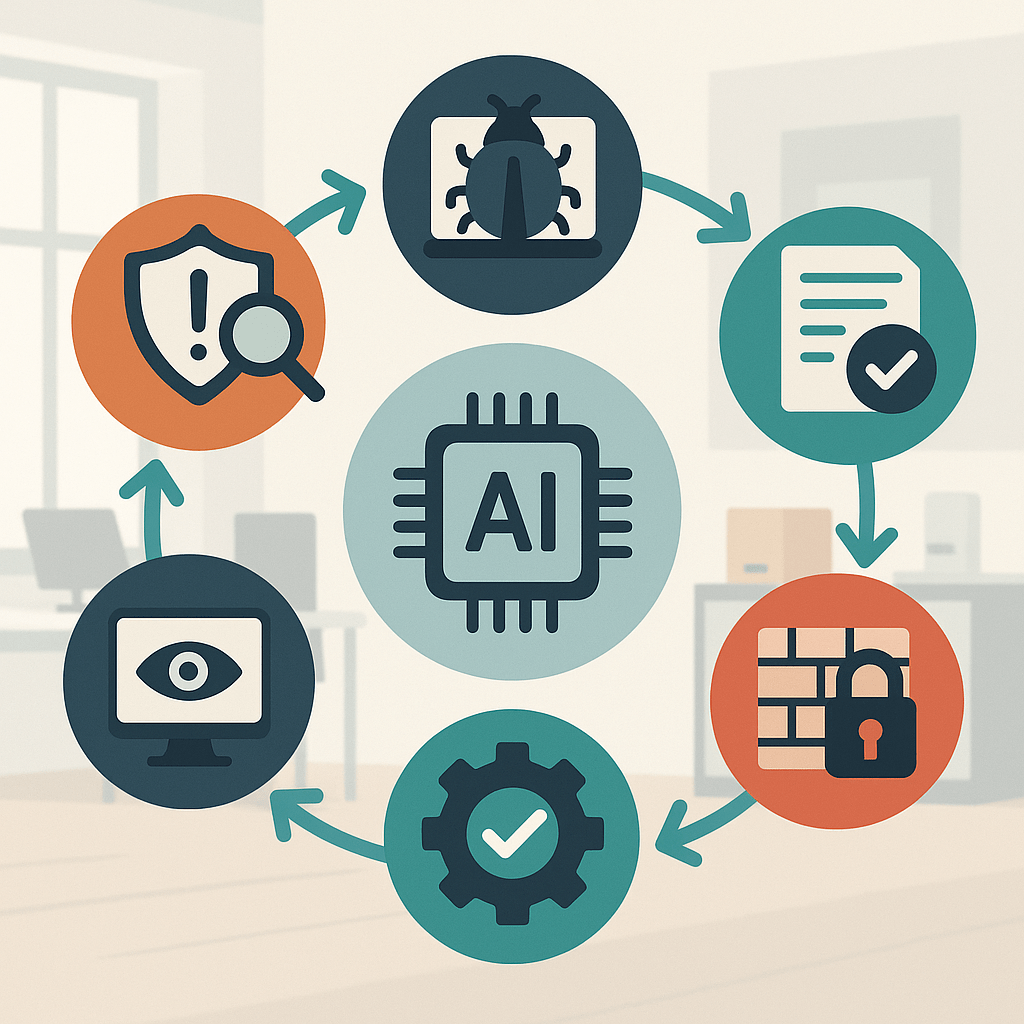

Strategic AI-Powered Cybersecurity Solutions and Tools for Prevention and Detection

If the previous section made AI-powered threats sound formidable, here’s the good news: The best defense is, in fact, a good offense (with a side order of machine learning). AI-driven cybersecurity solutions offer real, quantifiable benefits—detecting sophisticated threats faster, reducing costs, and freeing security professionals to focus where human judgment counts most.

Leading AI Threat Detection and Response Technologies

The race is on among vendors to deploy the smartest, most responsive AI security tools. Here are the standouts leading prevention and detection…

Darktrace: Powered by machine learning, Darktrace’s “immune system” model learns normal network behaviors, detecting threats that have never been seen before, even by global intelligence teams. This technology uses unsupervised learning and behavioral analytics to flag subtle anomalies with minimal configuration, making it a favorite among large enterprises looking to stamp out threats in real time [8].

IBM Watson Security: IBM leverages AI for automated threat triage, rapid analysis of millions of security events, and predictive threat modeling. Case studies from IBM Security show organizations that implement Watson can reduce mean time to detect and respond to incidents, translating to an average of $2.2 million in annual savings versus manual-heavy approaches [1, 9].

SentinelOne and Palo Alto Networks: These platforms provide end-to-end endpoint protection using AI and machine learning for detection and response. SentinelOne’s product documentation shows highly scalable results for organizations of all sizes, with AI-based systems providing real-time alerts and integrating seamlessly with existing security stacks. Palo Alto Networks takes it further with Extended Detection and Response (XDR) platforms, orchestrating defense across endpoints, cloud, and network layers [10, 11].

For a more technical look at these solutions, see Darktrace’s AI Arsenal write-up, IBM Security’s AI overview, SentinelOne’s AI threat detection guide, and Palo Alto Networks’ educational resources.
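Vendor claims about faster detection and response are easier to evaluate if you track the underlying metrics yourself. Here is a minimal sketch of how mean time to detect (MTTD) and mean time to respond (MTTR) might be computed from an incident log. The field names and sample incidents are hypothetical, and it assumes one common convention: MTTD runs from occurrence to detection, and MTTR from detection to resolution.

```python
from datetime import datetime
from statistics import mean


def mean_hours(incidents, start_key, end_key):
    """Average elapsed hours between two timestamps across all incidents."""
    return mean(
        [(inc[end_key] - inc[start_key]).total_seconds() / 3600 for inc in incidents]
    )


# Hypothetical incident records; in practice these would come from your ticketing or SIEM export.
incidents = [
    {
        "occurred": datetime(2025, 1, 6, 8, 0),
        "detected": datetime(2025, 1, 6, 14, 0),
        "resolved": datetime(2025, 1, 7, 2, 0),
    },
    {
        "occurred": datetime(2025, 2, 10, 9, 0),
        "detected": datetime(2025, 2, 10, 11, 0),
        "resolved": datetime(2025, 2, 10, 15, 0),
    },
]

mttd = mean_hours(incidents, "occurred", "detected")
mttr = mean_hours(incidents, "detected", "resolved")
print(f"MTTD: {mttd:.1f} hours, MTTR: {mttr:.1f} hours")
```

Measuring both numbers before and after a pilot gives you a baseline of your own to compare against any vendor's case studies.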

Challenges and Best Practices for Implementing AI-Driven Security Solutions

Of course, AI-powered tools aren’t simply plug-and-play. Businesses often hit speed bumps when integrating new solutions, such as ensuring training data quality, managing system interoperability, and—yes, back to those pesky false positives—keeping the “cry wolf” syndrome in check.

Security professionals from SentinelOne and Palo Alto Networks advise careful vetting of data pipelines and investing in cross-platform integration to avoid creating blind spots. Change management and staff training are also critical. AI tools work best when paired with agile teams prepared to fine-tune parameters and respond when the system itself waves a red flag instead of a green light [11].

PurpleSec’s best practices for AI adoption stress beta testing, staged deployment, and ongoing refinement. Their experts recommend collaboration with external consultants, regular plan reviews, and peer community engagement, because when it comes to AI, there’s still no “set it and forget it” magic button [2].

Developing Comprehensive Business Security and Cybersecurity Preparedness Plans Integrating AI

Security isn’t just about stacks of technology—it’s about people, processes, and playbooks. Building a future-ready business means designing preparedness plans that pull together AI capabilities, regulatory compliance, incident response, and collaboration at every level.

Essential Components of an AI-Integrated Cybersecurity Preparedness Plan

Your preparedness plan can’t stop at “buy tools.” FEMA and NIST frameworks set the gold standard for structuring your readiness…

Risk Assessment: Start with identifying what you need to protect—your data, endpoints, cloud resources, and supply chain vendors. Then assess current vulnerabilities through AI-augmented analytics.

Detection Mechanisms: Implement AI-driven tools (see above) to spot advanced threats and feed insights into centralized reporting dashboards.

Containment Strategies: Plan for rapid isolation of affected systems using automated playbooks, minimizing contagion velocity.

Recovery Protocols: Map out restoration processes for various scenarios, including AI-encrypted ransomware attacks. Regularly back up data and test restore patterns.

Ongoing Monitoring: Use continuous AI-based behavioral monitoring to spot “out of band” activities, and review system logs for zero-day threats.

Compliance Checks: Regularly cross-reference plan documentation with NIST, FEMA, and ISO standards—because no inspector wants to hear, “I thought we were fine” after the fact.

PurpleSec’s expert-authored checklists and AI in Cybersecurity article emphasize the importance of tailoring each step to your actual business profile: those with remote teams, IoT fleets, or sensitive customer data may require special attention to AI-specific threat vectors [2]. The NIST Cybersecurity Framework provides structured templates to follow.
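To make the idea of an automated containment playbook concrete, here is a minimal sketch. Everything in it is hypothetical: the alert types, step names, and host name are placeholders, and the steps are only recorded in a log rather than sent to real tooling. The point is the shape: each alert type maps to an ordered list of actions, and anything unrecognized goes to a human.

```python
# Hypothetical playbooks: each alert type maps to an ordered list of containment steps.
PLAYBOOKS = {
    "ransomware": ["isolate_host", "disable_account", "snapshot_disk", "notify_ir_team"],
    "credential_theft": ["disable_account", "revoke_sessions", "notify_ir_team"],
}


def run_playbook(alert_type, host, log):
    """Record the containment steps for an alert, in order, and return the log."""
    steps = PLAYBOOKS.get(alert_type)
    if steps is None:
        # No playbook matches, so hand the decision to a human analyst.
        log.append((host, "escalate_to_analyst"))
        return log
    for step in steps:
        # A real system would call out to EDR, identity, or ticketing tools here.
        log.append((host, step))
    return log


actions = run_playbook("ransomware", "laptop-17", [])
for host, step in actions:
    print(f"{host}: {step}")
```

Keeping playbooks as plain data makes them easy to review, version, and rehearse in tabletop exercises before an incident forces the issue.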

Employee Training and Threat Intelligence Collaboration

Even the best AI can’t fix user error—like clicking on that “urgent file” attachment. Modern preparedness relies on workforce education just as much as technology.

According to both the SBS Cyber Security Blog and CyberSaint’s NIST AI RMF playbook, regular, role-specific training in AI threat awareness and response protocols is essential. Training should cover recognizing sophisticated AI-generated phishing, escalation paths, and using secure collaboration tools. Interactive simulations can help employees learn to spot the real thing without the side effect of a data breach.

Most importantly, businesses should not operate in silos. Threat intelligence sharing—using secure, privacy-respecting channels—amplifies defenses for everyone, as collective experience is the best teacher. The JCDC AI Cybersecurity Collaboration Playbook offers practical frameworks for unified industry response and communication during incidents.

Aligning Preparedness Plans with Regulatory and Compliance Frameworks

Regulators expect (and auditors demand) that your preparedness plan isn’t collecting digital dust. Stay current. Review and update documentation with every significant tech or process change, and after notable external threat advisories. Key standards for 2025 include…

NIST AI Risk Management Framework: Offers a stepwise approach for incorporating trustworthy AI into cybersecurity planning, outlining risk identification, assessment, management, and governance measures [7].

ISO 27001/27002: Covers information security management, risk management, and documentation best practices for businesses of all sizes. Details are at ISO’s information security standards.

Regular Auditing: Routinely schedule updates, internal reviews, and third-party audits to maintain both readiness and peace of mind.

As CyberSaint and NIST agree, a well-documented, regularly updated plan is your best legal (and operational) defense in front of regulators and, more importantly, in recovering from an incident [6, 7].

Managing Increasing Complexity and Human Factors in Cybersecurity Amid AI Advancements

As AI weaves itself into the digital fabric, cybersecurity’s complexity levels up—sometimes to “boss battle” status. But complexity isn’t destiny. With the right strategy and a touch of managerial finesse, organizations can tame the chaos.

Factors Driving Increased Cybersecurity Complexity

The digital transformation buffet is all-you-can-eat, and with every new dish (hybrid cloud environments, IoT sensors, interconnected supply chains) comes a heaping portion of attack surface risk. The World Economic Forum reports a sevenfold increase since 2022 in small organizations citing insufficient cyber resilience, now at 35% [4]. The proliferation of endpoints, remote work, and bring-your-own-device policies have created sprawling ecosystems where one vulnerable device or account could serve as a cybercriminal’s golden ticket.

Hybrid clouds provide agility, but require new coordination across physical and digital perimeters. IoT devices, while boosting business intelligence, often ship with weaker security controls, making them favored entry points. SentinelOne and JPMorgan Chase’s corporate cybersecurity research reinforce that tackling these vulnerabilities demands a multi-layered, well-coordinated defense [12, 13].

Addressing Human and Organizational Challenges

No matter how sharp your algorithms, humans remain, for better or worse, the “soft target.” Workforce skill shortages, the relentless deluge of alerts (many AI-generated), and the fatigue that follows can all sabotage even the most advanced security programs. It turns out, technology is only as strong as the people implementing, monitoring, and reacting to it.

PurpleSec and CyberSaint experts advocate regular upskilling, human-AI teaming, and building a security culture embedded in business DNA [2, 6]. Counter alert fatigue by segmenting and prioritizing alarms so that critical threats are surfaced and routine events are delegated to automated workflows.
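Segmenting and prioritizing alarms can start out very simply. The sketch below assumes each alert carries a severity and an asset criticality rating on a 1 to 5 scale; the scoring rule (multiply the two) and the threshold of 12 are illustrative assumptions, not an industry standard. High-scoring alerts go to analysts, ordered by urgency, and the rest go to automated handling.

```python
def triage(alerts, threshold=12):
    """Split alerts into an analyst queue and an automated queue by a simple risk score."""
    analyst_queue, automated_queue = [], []
    for alert in alerts:
        # Hypothetical scoring rule: severity (1-5) times asset criticality (1-5).
        score = alert["severity"] * alert["asset_criticality"]
        scored = {**alert, "score": score}
        if score >= threshold:
            analyst_queue.append(scored)
        else:
            automated_queue.append(scored)
    # Analysts see the highest-risk alerts first.
    analyst_queue.sort(key=lambda a: a["score"], reverse=True)
    return analyst_queue, automated_queue


# Hypothetical alerts for illustration.
alerts = [
    {"id": "B", "severity": 2, "asset_criticality": 2},
    {"id": "C", "severity": 4, "asset_criticality": 3},
    {"id": "A", "severity": 5, "asset_criticality": 5},
]

analyst_queue, automated_queue = triage(alerts)
print("for analysts:", [a["id"] for a in analyst_queue])
print("automated:", [a["id"] for a in automated_queue])
```

The threshold is the lever to watch: set it too low and analysts drown again, too high and a genuine threat gets handled as routine. Reviewing it regularly is part of the human-AI teaming described above.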

Effective cybersecurity governance is the linchpin. Leadership must set a cadence of security reviews, empower managers to make fast decisions, and incentivize reporting—because “see something, say something” also applies to digital anomalies.

For a comprehensive overview of global cybersecurity trends—along with additional strategies for mitigating complexity—visit the World Economic Forum’s cybersecurity trends page.

Conclusion

The gauntlet has been thrown. AI has turbocharged both cyber threats and defenses in 2025, making the digital battlefield dynamic, contested, and never dull. Your organization’s survival depends on moving beyond outdated security approaches. By understanding the nuances of AI-powered attacks, deploying advanced AI-driven defense tools, building future-resilient preparedness plans, and facing complexity with strong governance, your business can stay not just compliant but truly secure.

What’s next? Put this guide into action. Begin refining your preparedness plan, explore and test cutting-edge detection tools that fit your business, invest in continual employee training, and keep pace with evolving regulations through trusted frameworks like NIST and ISO. If you ever feel overwhelmed, remember—even the best AI still can’t replace the value of an engaged, informed human team.

Subscribe to trusted cybersecurity updates, network with industry specialists, and don’t hesitate to consult with AI cybersecurity experts. The threats may be getting smarter, but with this guide, so are you.

References

1. CrowdStrike. (2025). “AI-Powered Cyberattacks.” CrowdStrike Cybersecurity 101. Retrieved from https://www.crowdstrike.com/en-us/cybersecurity-101/cyberattacks/ai-powered-cyberattacks/

2. Vazdar, T. (2024). “AI In Cybersecurity: Defending Against The Latest Cyber Threats.” PurpleSec. Retrieved from https://purplesec.us/learn/ai-in-cybersecurity/

3. Fortinet. (2025). “Advanced Persistent Threat (APT).” Fortinet Cyber Glossary. Retrieved from https://www.fortinet.com/resources/cyberglossary/advanced-persistent-threat

4. World Economic Forum. (2025). “Centre for Cybersecurity.” World Economic Forum. Retrieved from https://www.weforum.org/centre-for-cybersecurity

5. Palo Alto Networks. (2024). “Barriers to AI Adoption in Cybersecurity.” Palo Alto Networks Cyberpedia. Retrieved from https://www.paloaltonetworks.com/cyberpedia/what-are-barriers-to-ai-adoption-in-cybersecurity

6. Siddiqui, M. (2025). “Aligning with the NIST AI RMF Using a Step-by-Step Playbook.” CyberSaint Security Blog. Retrieved from https://www.cybersaint.io/blog/nist-ai-rmf-playbook

7. National Institute of Standards and Technology (NIST). (2023). “AI Risk Management Framework.” U.S. Department of Commerce. Retrieved from https://www.nist.gov/itl/ai-risk-management-framework

8. Darktrace. (2025). “Technology: Network Immune System.” Darktrace Innovation Hub. Retrieved from https://darktrace.com/technology

9. IBM Security. (2025). “Artificial Intelligence in Cybersecurity.” IBM Security Research. Retrieved from https://www.ibm.com/security/artificial-intelligence

10. SentinelOne. (2025). “AI Threat Detection.” SentinelOne Cybersecurity 101. Retrieved from https://www.sentinelone.com/cybersecurity-101/data-and-ai/ai-threat-detection/

11. Palo Alto Networks. (2025). “AI in Threat Detection.” Palo Alto Networks Cyberpedia. Retrieved from https://www.paloaltonetworks.com/cyberpedia/ai-in-threat-detection

12. SentinelOne. (2025). “Cybersecurity 101: Issues & Trends.” Retrieved from https://www.sentinelone.com/cybersecurity-101/cybersecurity/cyber-security-issues/

13. JPMorgan Chase. (2025). “12 Tips for Mitigating Cyber Risk.” Insights: Cybersecurity & Ransomware. Retrieved from https://www.jpmorgan.com/insights/cybersecurity/ransomware/12-tips-for-mitigating-cyber-risk